Inclusive & Assistive Products

ISDN2001/2002: Second Year Design Project

Why is it important?

The rapid development of modern web services, often accelerated by frameworks like FastAPI, necessitates a proactive approach to ensuring foundational stability and resilience from the earliest stages. A critical component in this architecture is the reverse proxy, which serves as the frontline defense against malformed requests and a key enabler for handling concurrent user traffic. This study conducts a comparative analysis of leading open-source reverse proxy solutions (e.g., Nginx, Caddy, HAProxy) to evaluate their efficacy in protecting a backend FastAPI application. Utilizing a controlled local environment with low-resource virtual machines, the research simulates realistic operational challenges.


Methodology

This study employs a comparative experimental approach to evaluate the performance and resilience of different open-source reverse proxy solutions when fronting a FastAPI application. The methodology is designed to simulate realistic operational challenges, specifically focusing on the proxies' ability to handle high concurrent connections, mitigate malformed HTTP requests, and maintain stability under adverse network conditions.

Environment Setup

All experiments were conducted in a controlled local environment to ensure reproducibility and minimize external variables. The setup consisted of two low-resource virtual machines (VMs) with the following specifications:

VM 1 (Application Server)

- Operating System: Rocky Linux 9.5
- CPU: 1 vCPU
- RAM: 1 GB
- Application: A FastAPI application developed for this study, featuring representative endpoints:
  - A simple, fast-responding endpoint, /fast, for baseline throughput testing.
  - An I/O-bound endpoint, /io_bound, simulating database or external API call latency using asyncio.sleep().
  - A POST endpoint, /data, designed to accept JSON payloads, used for testing malformed request handling.
- Web Server: Uvicorn, configured with 2 worker processes.
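The behavior that the /io_bound endpoint relies on can be illustrated with the standard library alone. The sketch below is not the study's application code: the handler name `io_bound_handler`, the helper names, and the 50 ms delay are assumptions chosen for illustration. It shows that coroutines waiting in asyncio.sleep() do not block the event loop, so many simulated requests finish in roughly the time of one.

```python
import asyncio
import time

# Assumed delay; the study does not state the value used by /io_bound.
SIMULATED_DELAY_S = 0.05


async def io_bound_handler() -> dict:
    """Stand-in for the /io_bound handler: wait without blocking the event loop."""
    await asyncio.sleep(SIMULATED_DELAY_S)
    return {"status": "done"}


async def serve_concurrently(n_requests: int) -> float:
    """Run n_requests handlers at once and return the elapsed wall-clock seconds."""
    start = time.perf_counter()
    await asyncio.gather(*(io_bound_handler() for _ in range(n_requests)))
    return time.perf_counter() - start


def measure(n_requests: int = 20) -> float:
    """Convenience wrapper that starts an event loop for one measurement."""
    return asyncio.run(serve_concurrently(n_requests))
```

Calling `measure(20)` should return a value close to the single-request delay rather than twenty times that delay, which is why such an endpoint stresses connection handling more than CPU.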

VM 2 (Reverse Proxy Server)

- Operating System: Rocky Linux 9.5
- CPU: 1 vCPU
- RAM: 2 GB
- Reverse Proxies: The following reverse proxy software was deployed sequentially as Docker containers for testing. Official Docker images were utilized for each proxy:
  - Nginx 1.27
  - Caddy 2.10
  - HAProxy 3.2.0

The above VMs were deployed on a physical machine with the following specifications:

- CPU: AMD Ryzen 9 7940H w/ Radeon 780M Graphics
- GPU: AMD Radeon 780M Graphics
- RAM: Crucial DDR5 5600MT/s 16GB x2 (Module Timing 46-45-45-90)
- NVMe: Predator SSD GM7 1TB
- OS: Windows 11 24H2
- Hypervisor: VMware Workstation 17 Pro

Basic configurations were applied to each proxy container to forward requests to the FastAPI application on VM 1. Configuration files were typically volume-mounted into the containers.

Specific configurations related to request buffering, timeout settings, and security headers were kept as default or minimally adjusted for basic functionality, unless a specific feature related to malformed request handling was being tested.

The two VMs were networked on a private virtual network to isolate traffic and allow for controlled network manipulation.

Tools

Load Generation (Apache JMeter 5.6.3)

JMeter was used to generate HTTP traffic towards the reverse proxy server. Test plans were designed to simulate:

- Varying levels of concurrent users.
- Different request patterns (GET, POST).
- Well-formed and deliberately malformed HTTP requests.

Network Problem Simulation (Clumsy 0.3)

Clumsy was utilized to introduce network degradation between the load generator (JMeter client) and the Reverse Proxy VM (VM 2). Simulated conditions included:

- Packet latency.
- Packet loss.

Network problems between the Reverse Proxy VM and the Application Server VM were not simulated, assuming a reliable, low-latency network path typical of same-zone VPC deployments in cloud environments.

System Monitoring

Standard operating system utilities (e.g., top, htop, vmstat on Linux) were used to monitor CPU and memory utilization on both VMs during test execution. Log files from the reverse proxies and the FastAPI application (via Uvicorn) were also collected for analysis.
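As an illustration of how average and peak CPU utilization might be derived from captured vmstat output, the following sketch parses the idle column and reports busy time as 100 minus idle. The function name `cpu_usage_from_vmstat` and the sample text are hypothetical; the study does not describe its exact post-processing.

```python
from statistics import mean

# Hypothetical capture of `vmstat 1`; the numbers are illustrative, not measured.
SAMPLE = """\
procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----
 r  b   swpd   free   buff  cache   si   so    bi    bo   in   cs us sy id wa st
 1  0      0 512000  10240 204800    0    0     5     2  150  300 10  5 85  0  0
 3  0      0 498000  10240 205000    0    0     0     8  900 2100 60 25 15  0  0
"""


def cpu_usage_from_vmstat(output: str) -> dict:
    """Return average and peak CPU utilization (percent) from vmstat text."""
    busy = []
    idle_col = None
    for line in output.splitlines():
        fields = line.split()
        if "id" in fields:
            # Column-name row: remember where the idle percentage is reported.
            idle_col = fields.index("id")
            continue
        if idle_col is None or len(fields) <= idle_col:
            continue  # banner row or blank line
        if not fields[idle_col].isdigit():
            continue
        busy.append(100 - int(fields[idle_col]))
    if not busy:
        raise ValueError("no vmstat samples found")
    return {"average": mean(busy), "peak": max(busy)}
```

Locating the idle column from the header row, rather than hard-coding its position, keeps the sketch tolerant of vmstat versions that print additional CPU columns. Note that the first sample vmstat prints reflects averages since boot, so it may be worth discarding before summarizing.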

Test Scenarios and Procedures

Baseline Performance Test

- Objective: Establish baseline throughput (requests per second, RPS) and response times under normal conditions with well-formed requests.
- Procedure: JMeter was configured to send a gradually increasing load of concurrent GET requests to the /fast and /io_bound endpoints for a sustained period (e.g., 5-10 minutes).
- Network: No Clumsy-induced network issues.

High Concurrency Test

- Objective: Determine the maximum concurrent load each proxy setup can handle before significant performance degradation or errors occur.
- Procedure: Similar to the baseline test, but the number of concurrent users was progressively increased until error rates exceeded a predefined threshold (e.g., 1%) or response times became unacceptable (e.g., >2 seconds average).
- Network: No Clumsy-induced network issues.
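The stopping rule described in this procedure can be sketched as follows. The `StepResult` record and the function name are hypothetical, and the default thresholds simply reuse the example values quoted above (1% errors, 2 seconds average response time); the study's actual thresholds may differ.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class StepResult:
    users: int             # concurrent users in this load step
    error_rate: float      # failed / total requests, between 0.0 and 1.0
    avg_response_s: float  # average response time in seconds


def max_sustainable_users(
    steps: Iterable[StepResult],
    max_error_rate: float = 0.01,
    max_avg_response_s: float = 2.0,
) -> Optional[int]:
    """Return the highest user count reached before either threshold was exceeded."""
    best = None
    for step in sorted(steps, key=lambda s: s.users):
        if step.error_rate > max_error_rate or step.avg_response_s > max_avg_response_s:
            break  # first failing step ends the ramp
        best = step.users
    return best
```

Returning `None` when even the smallest step fails makes the "no sustainable load found" case explicit instead of reporting a misleading zero.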

Malformed HTTP Request Handling Test

- Objective: Evaluate the reverse proxy's ability to identify, block, or sanitize malformed requests, and the FastAPI application's response if such requests pass through.
- Procedure: JMeter was configured to send a mix of valid requests and various types of malformed requests at a moderate concurrent load to the /data (POST) endpoint and other relevant endpoints. Malformed requests included:
  - Incorrect Content-Type headers.
  - Malformed payloads.
  - Excessively large request headers.
- Network: No Clumsy-induced network issues.
- Analysis: Proxy logs were examined for error codes and actions taken. FastAPI logs were checked for exceptions or graceful error handling (e.g., HTTP 400, 422 responses).
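To make the three malformed request categories concrete, the sketch below assembles one raw HTTP/1.1 request of each kind as bytes. It is illustrative only: the study generated this traffic with JMeter, and the host name, the JSON fields, and the 64 KiB header size used here are assumptions rather than values taken from the test plans.

```python
import json
from typing import Dict, Optional

HOST = "proxy.test"  # hypothetical host name for the reverse proxy VM
VALID_BODY = json.dumps({"name": "sample", "value": 1}).encode("ascii")  # assumed schema


def build_post(
    body: bytes,
    content_type: str = "application/json",
    extra_headers: Optional[Dict[str, str]] = None,
) -> bytes:
    """Assemble a raw HTTP/1.1 POST to /data with a correct Content-Length."""
    headers = {
        "Host": HOST,
        "Content-Type": content_type,
        "Content-Length": str(len(body)),
        "Connection": "close",
    }
    headers.update(extra_headers or {})
    head = "".join(f"{name}: {value}\r\n" for name, value in headers.items())
    return f"POST /data HTTP/1.1\r\n{head}\r\n".encode("ascii") + body


def wrong_content_type() -> bytes:
    """Valid JSON body labeled with a Content-Type the endpoint does not expect."""
    return build_post(VALID_BODY, content_type="text/plain")


def malformed_payload() -> bytes:
    """Drop the closing brace so the body no longer parses as JSON."""
    return build_post(VALID_BODY[:-1])


def oversized_header(size: int = 64 * 1024) -> bytes:
    """Add one padding header of `size` bytes to inflate the header section."""
    return build_post(VALID_BODY, extra_headers={"X-Padding": "a" * size})
```

In the malformed payload case the HTTP framing stays valid, so a proxy that does not inspect bodies would be expected to forward it and leave rejection to the application. Whether a given proxy rejects the oversized header depends on its configured limits.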

Network Disruption Resilience Test

- Objective: Assess the system's performance and stability when subjected to network latency and packet loss under a significant concurrent load (e.g., 70-80% of the determined high concurrency limit).
- Procedure:
  - A sustained load was applied using JMeter.
  - Clumsy was used to introduce predefined levels of latency (50 ms, 100 ms, 200 ms) and packet loss (1%, 5%, 10%) for 2 minutes.
  - The network disruption was then removed, and the system's recovery was observed under continued load.
- Endpoints: The test focused primarily on the /io_bound endpoint, which is more sensitive to network issues, but also included /fast.
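The load level and the disruption levels listed above can be laid out as a small run plan. This sketch assumes that latency and packet loss were applied in separate runs rather than combined, which the procedure does not specify; the function names and the 75% default are likewise assumptions.

```python
from itertools import chain

LATENCIES_MS = (50, 100, 200)   # latency levels from the procedure
LOSS_PERCENT = (1, 5, 10)       # packet loss levels from the procedure
DISRUPTION_SECONDS = 120        # each disruption lasted 2 minutes


def target_users(concurrency_limit: int, fraction: float = 0.75) -> int:
    """Concurrent users for the sustained load (70-80% of the measured limit)."""
    if not 0.70 <= fraction <= 0.80:
        raise ValueError("fraction should stay within the 70-80% band")
    return round(concurrency_limit * fraction)


def disruption_runs() -> list:
    """One run per latency level, then one per loss level (assumed: not combined)."""
    latency_runs = ({"kind": "latency", "level": ms, "unit": "ms"} for ms in LATENCIES_MS)
    loss_runs = ({"kind": "loss", "level": pct, "unit": "%"} for pct in LOSS_PERCENT)
    return [dict(run, seconds=DISRUPTION_SECONDS) for run in chain(latency_runs, loss_runs)]
```

For a measured limit of 400 concurrent users, `target_users(400)` gives 300, and `disruption_runs()` yields six two-minute runs.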

Metrics Collection

The following metrics were collected for each test scenario and reverse proxy configuration:

- Performance Metrics (from JMeter):
  - Throughput (Requests Per Second - RPS).
  - Average, median, 90th, and 99th percentile response times.
  - Error rates (count and percentage).
- Resource Utilization (from OS monitoring tools):
  - CPU usage (average and peak) on both VMs.
  - Memory usage (average and peak) on both VMs.
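The performance metrics listed above could be computed from raw samples along the following lines. This is a minimal sketch, not JMeter's implementation: it uses the nearest-rank percentile definition, which may differ slightly from the values JMeter reports, and the `(response_time_ms, success)` sample layout is an assumption.

```python
from statistics import mean, median


def percentile(sorted_values, pct: int):
    """Nearest-rank percentile of an ascending list (pct is an integer 1-100)."""
    n = len(sorted_values)
    rank = max(1, (pct * n + 99) // 100)  # integer form of ceil(pct * n / 100)
    return sorted_values[rank - 1]


def summarize(samples, duration_s: float) -> dict:
    """Summarize (response_time_ms, success) samples collected over duration_s seconds."""
    if not samples or duration_s <= 0:
        raise ValueError("need at least one sample and a positive duration")
    times = sorted(t for t, _ in samples)
    errors = sum(1 for _, ok in samples if not ok)
    return {
        "throughput_rps": len(samples) / duration_s,
        "avg_ms": mean(times),
        "median_ms": median(times),
        "p90_ms": percentile(times, 90),
        "p99_ms": percentile(times, 99),
        "error_count": errors,
        "error_pct": 100 * errors / len(samples),
    }
```

Reporting the 90th and 99th percentiles alongside the average matters here because a small share of slow requests under load can leave the average almost unchanged.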

Data Analysis

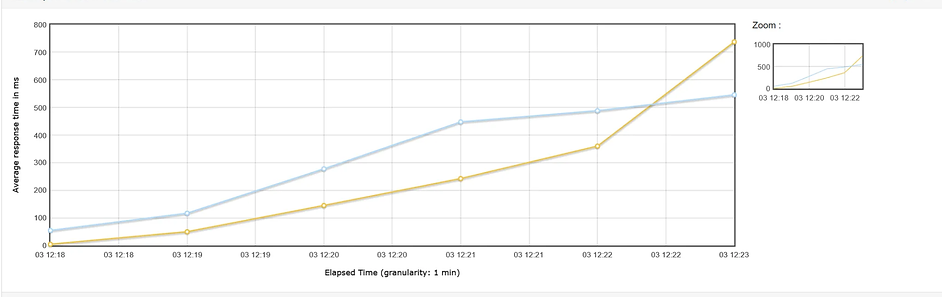

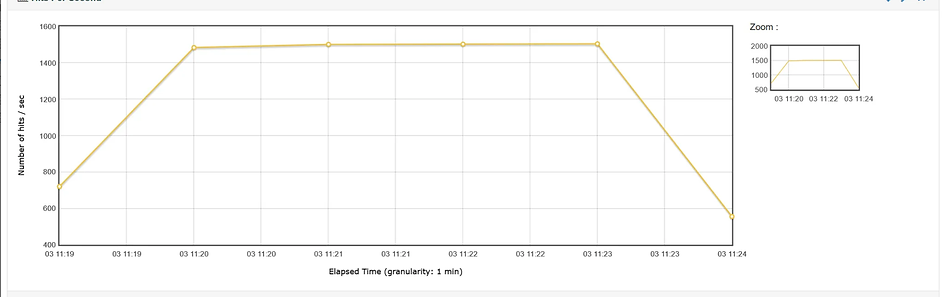

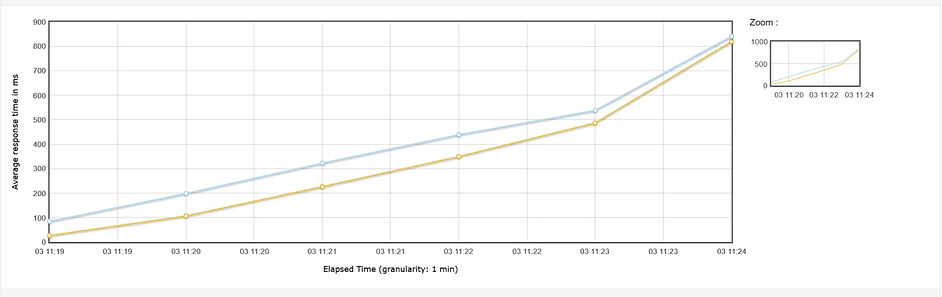

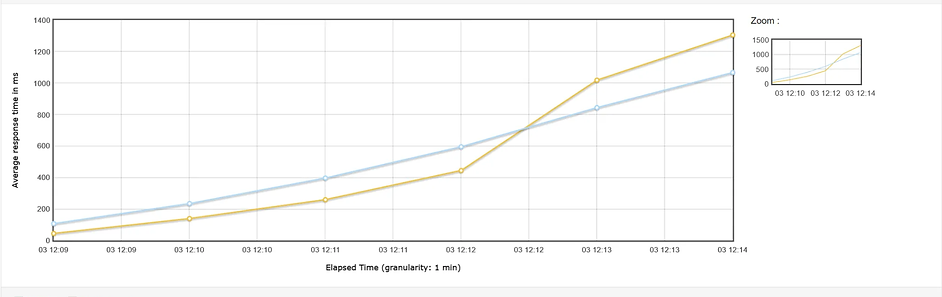

The collected quantitative data (performance metrics, resource utilization) were tabulated and visualized using charts and graphs to facilitate comparison across the different reverse proxy solutions. Statistical summaries were generated where appropriate. Qualitative data from logs were analyzed to understand the behavior of each proxy in specific scenarios, particularly in response to malformed requests and network disruptions. The efficacy of each reverse proxy was evaluated based on its ability to:

- Maximize throughput and minimize latency.
- Effectively block or mitigate malformed requests with minimal impact on valid traffic.
- Maintain stability and performance under high concurrency and adverse network conditions.
- Exhibit efficient resource utilization.