Inclusive & Assistive Products

ISDN2001/2002: Second Year Design Project

Key features

WordCraft anchors words in spatial 3D environments, letting dyslexic learners see vocabulary as interactive models and experience it in three dimensions. Built on neuroscience principles, the system celebrates visual thinking by forging deeper grapheme-phoneme-meaning bonds that traditional phonics often misses.

Doggy

"Feels like a lovable toy, works like a powerful learning tool—blending seamlessly into playtime while building language skills."

✓ The LCD screen provides real-time image feedback.

✓ Stores photos and audio of every wonderful moment.

✓ Pocket-size & portable. The package comes with a lanyard that can be hung on a bag.

- Core Hardware: Raspberry Pi Zero 2 W

- Imaging: OV5647 camera unit

- Interface: 2.4-inch LCD screen with real-time feedback

Magic Box

The magic box lets users:

✓ Rotate and modify 3D models for better recall

✓ Explore words through multi-sensory layers (visual, auditory, tactile)

✓ Learn via spatial memory—aligning with dyslexic cognitive strengths

- Core system: Raspberry Pi 5

- Display: 13.3" LCD with pseudo-3D holographic projection

- Depth Illusion: Multi-plane LED arrays create volumetric 3D effects within the enclosure

- Interaction: Touch-enabled for model manipulation

How it works

System Diagram

Subsystem I camera device

The system runs on Debian Bullseye and uses curses for the UI and OpenCV for image display and processing. Built on a Raspberry Pi Zero 2 W, it integrates a camera module for image capture and PyAudio for audio recording, enabling combined media acquisition in a compact embedded setup.

Subsystem II display hub

The system features a 13.3" LCD with pseudo-3D holographic projection, enhanced by multi-plane LED arrays that generate volumetric depth illusions within the enclosure. The display is touch-enabled, allowing direct interaction with 3D models through intuitive manipulation gestures.

This interactive 3D model software uses Three.js for real-time mesh deformation via direct touch input. It employs raycasting for touch-to-mesh interaction and performs vertex displacement on BufferGeometry with spatial partitioning for performance. The system features dynamic brush visualization, PBR materials with ACES tone mapping, and separate modes for navigation and sculpting. Touch-specific optimizations include expanded hit detection and adjusted camera controls for finger input. Geometry updates are optimized through targeted buffer updates and on-the-fly normal recalculations.
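The spatial partitioning mentioned above can be illustrated with a uniform 3D grid that buckets vertex indices by cell, so a brush stroke only inspects nearby cells instead of the whole mesh. This is a minimal sketch under stated assumptions, not the project's code: the `VertexGrid` class, its `query` method, and the cell size are hypothetical, and the positions are assumed to be a flat `Float32Array` of x, y, z triples like the one behind a Three.js BufferGeometry position attribute.

```typescript
// Hypothetical helper (not from the project source): a uniform 3D grid
// that maps each cell to the indices of the vertices inside it.
class VertexGrid {
  private cells = new Map<string, number[]>();

  constructor(private positions: Float32Array, private cellSize: number) {
    const vertexCount = positions.length / 3;
    for (let i = 0; i < vertexCount; i++) {
      const key = this.keyFor(positions[3 * i], positions[3 * i + 1], positions[3 * i + 2]);
      const bucket = this.cells.get(key);
      if (bucket) bucket.push(i);
      else this.cells.set(key, [i]);
    }
  }

  // Build a string key from the integer cell coordinates of a point.
  private keyFor(x: number, y: number, z: number): string {
    const c = this.cellSize;
    return `${Math.floor(x / c)},${Math.floor(y / c)},${Math.floor(z / c)}`;
  }

  // Return the indices of all vertices within `radius` of (cx, cy, cz).
  // Only cells overlapping the brush's bounding box are visited.
  query(cx: number, cy: number, cz: number, radius: number): number[] {
    const c = this.cellSize;
    const r2 = radius * radius;
    const result: number[] = [];
    for (let ix = Math.floor((cx - radius) / c); ix <= Math.floor((cx + radius) / c); ix++) {
      for (let iy = Math.floor((cy - radius) / c); iy <= Math.floor((cy + radius) / c); iy++) {
        for (let iz = Math.floor((cz - radius) / c); iz <= Math.floor((cz + radius) / c); iz++) {
          const bucket = this.cells.get(`${ix},${iy},${iz}`);
          if (!bucket) continue;
          for (const i of bucket) {
            const dx = this.positions[3 * i] - cx;
            const dy = this.positions[3 * i + 1] - cy;
            const dz = this.positions[3 * i + 2] - cz;
            if (dx * dx + dy * dy + dz * dz <= r2) result.push(i);
          }
        }
      }
    }
    return result;
  }
}
```

Because sculpting moves vertices, a grid like this would need its buckets refreshed for displaced vertices; how the project handles that is not stated here.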

The sculpting function enables real-time mesh deformation through direct user interaction, utilizing Three.js's raycasting system to map screen touches to 3D surface coordinates. When activated, it modifies vertex positions in the model's BufferGeometry, applying either additive extrusion or subtractive intrusion based on face normals, with intensity governed by a configurable falloff curve, 1 - (d/r)^4, where d is a vertex's distance from the touch point and r is the brush radius. The system employs spatial partitioning in a 3D grid structure to efficiently process only relevant vertices within the brush radius, which dynamically scales with the model. Vertex position buffers are flagged for GPU updates while computeVertexNormals() maintains proper lighting continuity. A visual indicator constructed from RingGeometry provides real-time feedback, rendered with transparent double-sided material. The entire pipeline executes within the same frame cycle, maintaining 60 fps performance even during continuous touch gestures through optimized buffer management and selective vertex processing.
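The falloff-weighted displacement described above can be sketched in a few lines. This is an illustrative sketch, not the project's implementation: the function names `falloff` and `sculpt`, the tuple type `Vec3`, and the `strength` parameter are assumptions, and the loop scans every vertex for simplicity, whereas the text describes a spatial grid that restricts work to vertices near the brush.

```typescript
type Vec3 = [number, number, number];

// Falloff weight 1 - (d/r)^4: full strength at the brush center,
// dropping to 0 at the brush radius and beyond.
function falloff(d: number, r: number): number {
  if (d >= r) return 0;
  const t = d / r;
  return 1 - t * t * t * t;
}

// Displace vertices near `hit` along `normal` (assumed to be unit length).
// A positive `strength` extrudes, a negative one intrudes.
// `positions` is a flat x, y, z array and is modified in place.
// Returns the number of vertices that were moved.
function sculpt(
  positions: Float32Array,
  hit: Vec3,
  normal: Vec3,
  radius: number,
  strength: number
): number {
  let moved = 0;
  for (let i = 0; i < positions.length; i += 3) {
    const dx = positions[i] - hit[0];
    const dy = positions[i + 1] - hit[1];
    const dz = positions[i + 2] - hit[2];
    const w = falloff(Math.sqrt(dx * dx + dy * dy + dz * dz), radius);
    if (w === 0) continue; // outside the brush
    positions[i] += normal[0] * strength * w;
    positions[i + 1] += normal[1] * strength * w;
    positions[i + 2] += normal[2] * strength * w;
    moved++;
  }
  return moved;
}
```

In a Three.js scene, the array would typically be `geometry.attributes.position.array`; after calling a function like this, setting `geometry.attributes.position.needsUpdate = true` flags the buffer for upload and `geometry.computeVertexNormals()` refreshes the lighting normals, matching the steps the paragraph describes.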

Subsystem III optimized pipeline

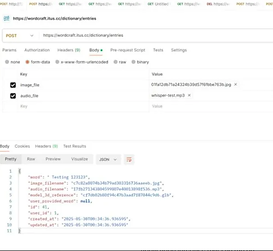

FastAPI provides a RESTful API for the frontend application. The backend server stores and retrieves the data, and it sends the video and audio data to AI APIs for transcription and 3D model generation.

Electron is used to build the application interface, which runs on multiple platforms from a single codebase. Its large community and library ecosystem also save development time and effort.